# code for loading the format for the notebook

import os

# path : store the current path to convert back to it later

path = os.getcwd()

os.chdir(os.path.join('..', '..', 'notebook_format'))

from formats import load_style

load_style(plot_style=False)

os.chdir(path)

# 1. magic for inline plot

# 2. magic to print version

# 3. magic so that the notebook will reload external python modules

# 4. magic to enable retina (high resolution) plots

# https://gist.github.com/minrk/3301035

%matplotlib inline

%load_ext watermark

%load_ext autoreload

%autoreload 2

%config InlineBackend.figure_format='retina'

import time

import fasttext

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# prevent scientific notations

pd.set_option('display.float_format', lambda x: '%.3f' % x)

%watermark -a 'Ethen' -d -t -v -p numpy,pandas,matplotlib,fasttext,scipy

Product Quantization for Model Compression¶

Product Quantization or often times PQ for short is an extremely popular algorithm for compressing vectors/embeddings and performing approximate nearest neighborhood search. In this example, we'll be using fasttext to illustrate the concept. The library and the data preparation step has already been introduced in another documentation, hence we won't be spending too much on them.

Data Preparation and Model¶

# download the data and un-tar it under the 'data' folder

# -P or --directory-prefix specifies which directory to download the data to

!wget https://dl.fbaipublicfiles.com/fasttext/data/cooking.stackexchange.tar.gz -P data

# -C specifies the target directory to extract an archive to

!tar xvzf data/cooking.stackexchange.tar.gz -C data

!head -n 3 data/cooking.stackexchange.txt

# train/test split

from fasttext_module.split import train_test_split_file

from fasttext_module.utils import prepend_file_name

data_dir = 'data'

test_size = 0.2

input_path = os.path.join(data_dir, 'cooking.stackexchange.txt')

input_path_train = prepend_file_name(input_path, 'train')

input_path_test = prepend_file_name(input_path, 'test')

random_state = 1234

encoding = 'utf-8'

train_test_split_file(input_path, input_path_train, input_path_test,

test_size, random_state, encoding)

print('train path: ', input_path_train)

print('test path: ', input_path_test)

# train the fasttext model

fasttext_params = {

'input': input_path_train,

'lr': 0.1,

'lrUpdateRate': 1000,

'thread': 8,

'epoch': 15,

'wordNgrams': 1,

'dim': 80,

'loss': 'ova'

}

model = fasttext.train_supervised(**fasttext_params)

print('vocab size: ', len(model.words))

print('label size: ', len(model.labels))

print('example vocab: ', model.words[:5])

print('example label: ', model.labels[:5])

model_checkpoint = os.path.join('model', 'model.fasttext')

model.save_model(model_checkpoint)

# model.get_input_matrix().shape

print('output matrix shape: ', model.get_output_matrix().shape)

model.get_output_matrix()

Product Quantization from Scratch¶

The main goal behind compression is that after training our model, the model size is too large for deployment. e.g. when deploying on edge devices where we have limited memory at hand or would require using a bigger machine which ultimately incur more infrastructure costs.

In this situation, product quantization is a compression technique for compressing embeddings. It has been shown that it significantly reduce the size of the model without hurting the performance by much. And without further a due, we'll dive right into how this technique works.

Learning Code Book¶

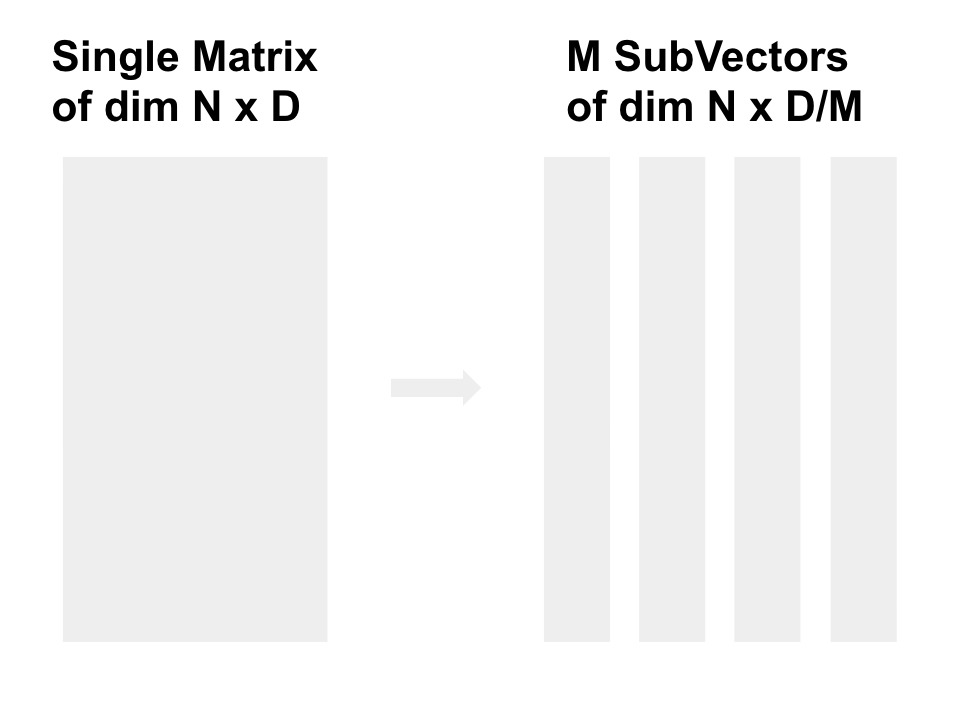

Our first step is to split the a $d$ dimensional vectors/matrix $\mathbf{X}$ into $m$ distinct subvectors $u_j$, $1 \leq j \leq m$ of dimension $d^* = d / m$, where $d$ should ideally be multiple of $m$.

As an example, say our original embedding has a dimension of 1000x80, we can split it into 4 subvectors, each having a dimension of 1000x20.

Next, we'll run a k-means clustering algorithm on each subvectors. Upon computing the cluster centroid for each of $k$ cluster and $m$ subvectors, we would have on our hand, $m$ cluster centroids, each of size $k \times d^*$. In other words, reducing the dimension from $n \times d$ into $m \times k \times d^* = k \times d$

So we'll apply k-means on our 1000x20 subvectors. If $k$ is 10, we would end up having 10 cluster centroids each of size 20. Since we have 4 subvectors, we would have 4 of those.

Information theory nomenclature is often times used to explain these concepts. Where:

- The cluster centroids is referred to as codebook.

- The cluster index is called a code, a reproduction value, a reconstruction value.

We'll be using scipy vector quantization module's k-means clustering implementation in the example code.

from scipy.cluster.vq import kmeans2, vq

def compute_code_books(vectors, sub_size=2, n_cluster=128, n_iter=20, minit='points', seed=123):

n_rows, n_cols = vectors.shape

n_sub_cols = n_cols // sub_size

np.random.seed(seed)

code_books = np.zeros((sub_size, n_cluster, n_sub_cols), dtype=np.float32)

for subspace in range(sub_size):

sub_vectors = vectors[:, subspace * n_sub_cols:(subspace + 1) * n_sub_cols]

centroid, label = kmeans2(sub_vectors, n_cluster, n_iter, minit=minit)

code_books[subspace] = centroid

return code_books

sub_size = 2 # m

n_cluster = 64 # k

# learning the cluster centroids / code books for our output matrix/embedding

code_books = compute_code_books(model.get_output_matrix(), sub_size, n_cluster)

print('code book size: ', code_books.shape)

Encode¶

The cluster centroids for each of the subvectors represents the average/common pattern of each subvectors, and what we're going to do is to replace the original vector with the cluster centroid of each subvectors. The effect of doing so is instead of storing the original floating values for every record in our dataset, we'll be replacing it with the closest cluster centroid id within each subvectors.

i.e. At the end, we'll be "compressing" our original vector of size $n \times d$ into a size of $n \times m$

def encode(vectors, code_books):

n_rows, n_cols = vectors.shape

sub_size = code_books.shape[0]

n_sub_cols = n_cols // sub_size

codes = np.zeros((n_rows, sub_size), dtype=np.int32)

for subspace in range(sub_size):

sub_vectors = vectors[:, subspace * n_sub_cols:(subspace + 1) * n_sub_cols]

code, dist = vq(sub_vectors, code_books[subspace])

codes[:, subspace] = code

return codes

# our original embedding now becomes the cluster centroid for each subspace

vector_codes = encode(model.get_output_matrix(), code_books)

print('encoded vector codes size: ', vector_codes.shape)

vector_codes

We can calculate the potential size/memory savings if we were to go from storing the original vector into storing the encoded codes and the code book.

(vector_codes.nbytes + code_books.nbytes) / model.get_output_matrix().nbytes

Instead of directly compressing the original embedding, we can also learn the codebooks and compress all new incoming embeddings on the fly.

Computing Query Distance¶

We can also compute nearest neighbors using the compressed vectors.

# we'll get one of the labels to find its nearest neighbors

label_id = 0

print(model.labels[label_id])

query = model.get_output_matrix()[label_id]

query.shape

# printing out the shape of the code book to hopefully make it easier

code_books.shape

To do so, we'll be computing the distance between each subspace of the query with the cluster centroid of each subspace, giving us a $m \times k$ distance table, where each one denotes the squared Euclidean distance between the $m_{th}$ subvector of the query and the $k_{th}$ code/cluster centroid for that $m_{th}$ subvector.

def query_dist_table(query, code_books):

sub_size, n_cluster, n_sub_cols = code_books.shape

dist_table = np.zeros((sub_size, n_cluster))

for subspace in range(sub_size):

sub_query = query[subspace * n_sub_cols:(subspace + 1) * n_sub_cols]

diff = code_books[subspace] - sub_query.reshape(1, -1)

diff = np.sum(diff ** 2, axis=1)

dist_table[subspace, :] = diff

return dist_table

dist_table = query_dist_table(query, code_books)

print(dist_table.shape)

dist_table[:, :5]

Then assuming for original vector is already encoded in advance, we can lookup the distances for each cluster centroid and add them up.

# lookup the distance

dists = np.sum(dist_table[range(sub_size), vector_codes], axis=1)

dists[:5]

# the numpy indexing trick is equivalent to the following loop approach

n_rows = vector_codes.shape[0]

dists = np.zeros(n_rows).astype(np.float32)

for n in range(n_rows):

for m in range(sub_size):

dists[n] += dist_table[m][vector_codes[n][m]]

dists[:5]

# find the nearest neighbors and "translate" it to the original labels

k = 5

nearest = np.argsort(dists)[:k]

[model.labels[label] for label in nearest]

The approach illustrated here is more of a naive approach as it still involves calculating the distances to all the vectors, which can still be inefficient for large $n$ (number of data points). We won't be discussing how to speed up the nearest neighborhood search process for product quantization as this documentation is more focused on the compression aspect of it.

Fasttext Product Quantization¶

Fasttext comes with built-in capabilities for doing model compression using product quantization. We'll experiment with different options/parameter and measure the model performance and model size. i.e. compression ratio v.s. model performance dip.

The next couple of code chunks defines the functions to measure the model performance and model file size.

from typing import Dict

def score(input_path_train: str,

input_path_test: str,

model: fasttext.FastText._FastText,

k: int,

round_digits: int=3) -> Dict[str, float]:

file_path_dict = {

'train': input_path_train,

'test': input_path_test

}

result = {}

for group, file_path in file_path_dict.items():

num_records, precision_at_k, recall_at_k = model.test(file_path, k)

f1_at_k = 2 * (precision_at_k * recall_at_k) / (precision_at_k + recall_at_k)

metric = {

f'{group}_precision@{k}': round(precision_at_k, round_digits),

f'{group}_recall@{k}': round(recall_at_k, round_digits),

f'{group}_f1@{k}': round(f1_at_k, round_digits)

}

result.update(metric)

return result

k = 1

result = score(input_path_train, input_path_test, model, k)

result

def compute_file_size(file_path: str) -> str:

"""

Calculate the file size and format it into a human readable string.

References

----------

https://stackoverflow.com/questions/2104080/how-can-i-check-file-size-in-python

"""

file_size = compute_raw_file_size(file_path)

file_size_str = convert_bytes(file_size)

return file_size_str

def compute_raw_file_size(file_path: str) -> int:

"""Calculate the file size in bytes."""

file_info = os.stat(file_path)

return file_info.st_size

def convert_bytes(num: int) -> str:

"""Convert bytes into more human readable MB, GB, etc."""

for unit in ['bytes', 'KB', 'MB', 'GB', 'TB']:

if num < 1024.0:

return "%3.1f %s" % (num, unit)

num /= 1024.0

compute_file_size(model_checkpoint)

For this part of the experiment, we'll tweak the parameter, dimension of subvector, dsub. Remember that this is one of main parameter that controls the tradeoff between the compression ratio and amount of distortion (deviation from the original vector).

dsubs = [-1, 2, 4, 8]

results = []

for dsub in dsubs:

# ensure we are always loading from the original model,

# i.e. do not over-ride the model_checkpoint variable

fasttext_model = fasttext.load_model(model_checkpoint)

if dsub > 0:

dir_name = os.path.dirname(model_checkpoint)

model_path = os.path.join(dir_name, f'model_quantized_dsub{dsub}.fasttext')

# qnorm, normalized the vector and quantize it

fasttext_model.quantize(dsub=dsub, qnorm=True)

fasttext_model.save_model(model_path)

else:

model_path = model_checkpoint

result = score(input_path_train, input_path_test, fasttext_model, k)

result['dsub'] = dsub

result['file_size'] = compute_raw_file_size(model_path)

results.append(result)

df_results = pd.DataFrame.from_dict(results)

df_results

We can visualize the table results. Our main observation is that setting dsub to 2 seems to give the most memory reduction while preserving most of the model's performance.

# change default style figure and font size

plt.rcParams['figure.figsize'] = 15, 6

plt.rcParams['font.size'] = 12

fig, (ax1, ax2) = plt.subplots(1, 2)

fig.suptitle('Fasttext Quantization Experiments')

ax1.plot(df_results['dsub'], df_results['file_size'])

ax1.set_title('dsub versus file size')

ax1.set_xlabel('dsub')

ax1.set_ylabel('file size (bytes)')

ax2.plot(df_results['dsub'], df_results['test_precision@1'])

ax2.set_title('dsub versus test precision@1')

ax2.set_xlabel('dsub')

ax2.set_ylabel('precision@1')

plt.show()

The .quantize method also provide other options that we did not use here such as:

- Whether to quantize the output matrix,

qout - Whether to retrain the model's vector after doing the quantization,

retrain.

Here we also list down the other compression tidbits from the original paper.

- Quantize the Norm fasttext has a parameter

qnormto normalize of the vector and also quantize that norm. This often times leads to a lesser drop in accuracy. - Retrain after Quantization: This suggests a bottom-up learning strategy where we first quantize the input matrix, then retrain and quantize the output matrix (the input matrix being frozen).

- Vocabulary Pruning: Upon training the model, we can remove features that do not play a large role in the model. For each document, we verify if it is already covered by a retained feature and, if not, we add the feature with the highest norm to our set of retained features. If the number of features is below some user specified threshold, we add the features with the highest norm that have not yet been picked.

- Choice of subvectors: We observe in practice that using $k = d/2$ subvectors, i.e., half of the components of the embeddings, works well in practice. Using less subquantizers significantly decreases the performance for a small memory gain.

Product Quantization Recap¶

To wrap up, we'll do a recap of product quantization, this time using a bit more notation.

The idea behind product quantization is to compress our original matrix into compact codes, where the comparison of the compact codes approximates the comparison in the original space. In the process of doing so, we are essentially retaining the most useful information within our matrix while discarding the less-relevant ones.

We have an original matrix $\mathbf{X} = [\mathbf{x}^1, \mathbf{x}^2, ..., \mathbf{x}^d]$, where $\mathbf{x}^i \in \mathbb{R}^n$. The input matrix will first be split into $m$ distinct sub-matrix, $\mathbf{U}^j$, $1 \leq j \leq m$, each of dimension $d^* = d / m$, where $d$ should ideally be multiple of $m$.

\begin{align} \underbrace{\mathbf{x}^1, ..., \mathbf{x}^{d^*}}_{\mathbf{U}^1}, ..., \underbrace{\mathbf{x}^{d - d^* + 1}, ..., \mathbf{x}^{d}}_{\mathbf{U}^m} \end{align}Then a product quantizer function, $q(\cdot)$, is defined as a concatenation of sub-quantizer.

\begin{align} q(\mathbf{X}) = \big[q^1(\mathbf{U}^1), q^2(\mathbf{U}^2), ..., q^M(\mathbf{U}^m)\big] \end{align}Where each sub-quantizer, $q^j$, is usually a low complexity quantizer/clustering algorithm, such as k-means. In other words, each sub-quantizer learns a sub-codebook/sub-cluster centroids $C^j$ comprises of $k$ centroids each of size $d^*$. Then the quantizer would map an input into its respective code/centroid under each sub-matrix and the final representation would be the concatenation of $m$ centroids.

\begin{align} \mathbf{c} = [\mathbf{c}^1, \mathbf{c}^2, ..., \mathbf{c}^m] \in C = C^1 \times C^2, ..., C^m \end{align}Typically, the number of cluster centroids, $k$, is set to 256 so that each code/centroid can be represented by 8 bits.

Reference¶

- Github: Nano Product Quantization (nanopq)

- Blog: Product Quantizers for k-NN Tutorial Part 1

- Paper: A. Joulin, E. Grave, P. Bojanowski, M. Douze, H. Jegou, T. Mikolov - FastText.zip: Compressing text classification models (2016)

- Paper: H. Jegou, M. Douze, and C. Schmid. - Product quantization for nearest neighbor search (2011)